OLI Psychology is not your typical course. Our goal is for you to work through the course

materials online on your own time and in the way that is most efficient given your prior

knowledge.

While you will have more flexibility than you do in a traditional course, you will also

have more responsibility for your own learning. You will need to:

- Plan how to work through each unit.

- Determine how to use the various features of the course to help you learn.

- Decide when you need to seek additional support.

What You Need to Know About Each Unit

Each unit in this course has features designed to support you as an independent learner,

including:

- Explanatory content: This is the informational “meat” of every unit. It consists of short passages of text with information, images, explanations, and short videos.

- Learn By Doing activities: Learn By Doing activities give you the chance to practice the concept that you are learning, with hints and feedback to guide you if you struggle.

- Did I Get This? activities: Did I Get This? activities are your chance to do a quick "self-check" and assess your own understanding of the material before doing a graded activity.

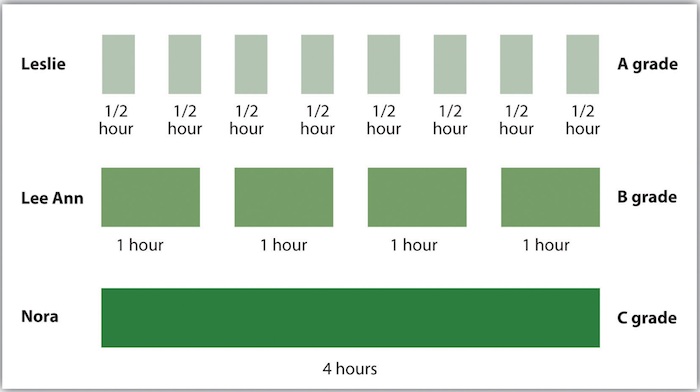

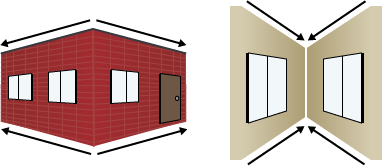

Completing this course efficiently and effectively

When starting an online course, most people neglect planning, opting instead to

jump in and begin working. While this might seem efficient (after all, who wants

to spend time planning when they could be doing?), it can ultimately be

inefficient. In fact, one of the characteristics that distinguishes experts
from novices is that experts spend far more time planning their approach to a
task and less time actually completing it, while novices do the reverse, rushing
through the planning stage and spending far more time overall.

In this course, we want to help you work as efficiently and effectively as

possible, given what you already know. Some of you have already taken a

psychology course, and are already familiar with many of the concepts. You may

not need to work through all of the activities in the course, just enough to

make sure that you've "got it." For others, this is your first exposure to

psychology, and you will want to do more of the activities, since you are

learning these concepts for the first time.

Improving your planning skills as you work through the material in the course

will help you to become a more strategic and thoughtful learner and will enable

you to more effectively plan your approach to assignments, exams and projects in

other courses.

Metacognition

This idea of planning your approach to the course before you start is part of what is called
metacognition.

Definition: Metacognition, or “thinking about thinking,” refers to your awareness of yourself as a learner and your ability to regulate your own learning.

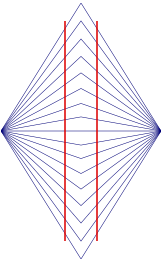

Metacognition involves five distinct skills:

- Assess the task: Get a handle on what is involved in completing a task (the steps or components required for success) and any constraints (time, resources).

- Evaluate your strengths and weaknesses: Evaluate your own skills and knowledge in relation to a task.

- Plan an approach: Take into account your assessment of the task and your evaluation of your own strengths and weaknesses in order to devise an appropriate plan.

- Apply strategies and monitor your performance: Continually monitor your progress as you are working on a task, comparing where you are to the goal you want to achieve.

- Reflect and adjust if needed: Look back on what worked and what didn't work so that you can adjust your approach next time and, if needed, start the cycle again.

These five skills are applied over and over again in a cycle, both within the
same course and from one course to another.

Example

Metacognition in Action

You get an assignment and ask yourself: “What exactly does this assignment involve

and what have I learned in this course that is relevant to it?”

You are exercising metacognitive skills (1) and (2) by assessing the task and

evaluating your strengths and weaknesses in relation to it.

If you think about what steps you need to take to complete the assignment and

determine when it is reasonable to begin, you are exercising skill (3) by

planning.

If you start in on your plan and realize that you are working more slowly

than you anticipated, you are putting skill (4) to work by applying a

strategy and monitoring your performance.

Finally, if you reflect on your performance in relation to your timeframe for
the task, and discover an equally effective but more efficient way to work,
you are engaged in skill (5): reflecting and adjusting your approach as
needed.

Example

Joe's Learning Strategies

Metacognition is not rocket science. In some respects, it is fairly ordinary and
intuitive. Yet you’d be surprised how often people lack strong metacognitive skills, and
you’d be amazed by how much weak metacognitive skills can undermine performance.

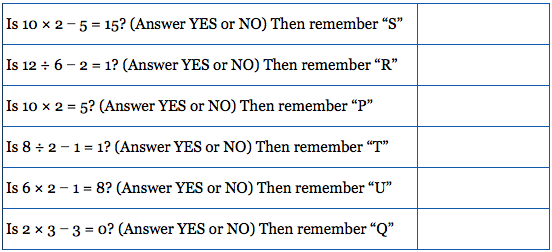

Learn By Doing

Now take the opportunity to practice the concepts you've been learning by doing

these two Learn By Doing activities. Read each of the scenarios below and

identify which metacognitive skill the student is struggling with. If you need

help, remember that you can ask for a hint.

You've now read through the explanatory content in this unit, and you've had a chance to

practice the concepts. Take a moment to reflect on your understanding. Do you feel like

you are "getting it"? Use these next two activities to find out.

Strong metacognitive skills are essential for independent learning, so use the experience

of monitoring your own learning in OLI Psychology as an opportunity to hone these skills

for other classes and tasks.


This Introduction to Psychology course was developed as part of the Community College Open

Learning Initiative. Using an open textbook from Flatworld Knowledge as a foundation,

Carnegie Mellon University's Open Learning Initiative has built an online learning

environment designed to enact instruction for psychology students.


The Open Learning Initiative (OLI) is a grant-funded group at Carnegie Mellon University,

offering innovative online courses to anyone who wants to learn or teach. Our aim is to

create high-quality courses and contribute original research to improve learning and

transform higher education by:

- Supporting better learning and instruction with high-quality, scientifically-based,

classroom-tested online courses and materials.

- Sharing our courses and materials openly and freely so that anyone can learn.

- Developing a community of use, research, and development.


Flatworld Knowledge is a college

textbook publishing company on a mission. By using technology and innovative business

models to lower costs, Flatworld is increasing access and personalizing learning for college

students and faculty worldwide. Text, graphics and video in this course are built on materials by

Flatworld Knowledge, made available

under a CC-BY-NC-SA

license. Interested in a companion text for this course? Flatworld provides access

to the original textbook online and makes digital and print copies of the original

textbook available at a low cost.


Welcome to the world of psychology. This course will introduce you to
some of the most important ideas, people, and research methods from the field of
psychology. You probably already know about some people, perhaps Sigmund Freud or B. F.

Skinner, who contributed to our understanding of human thought and behavior, and you may

have learned about important ideas, such as personality testing or methods of

psychotherapy. This course will give you the opportunity to refine and organize the

knowledge you bring to the class, and we hope that you will learn about theories,

phenomena, and research results that give you new insight into the human condition.

This first module is your opportunity to explore the field of psychology for a while before

moving into material that will be assessed and tracked. Let’s start with an obvious

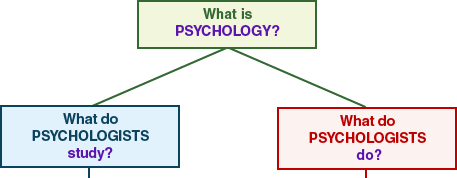

question:

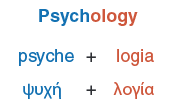

The word psychology is based on two words from the Greek language:

psyche, which means “life” or, in a more restricted sense,
“mind” or “spirit,” and logia, which is the source for the current
meaning, “the study of…”

Whatever the origin of the word, over the years, philosophers, scientists, and other

interested people have debated about the “proper” subject matter for psychology. Should we

focus on actual behavior, which we can observe and even measure, or on the mind, which

includes the rich inner experience we all have of the world and of our own thoughts and

motives, or on the brain, which is the centerpiece of the physical systems that make

thought and behavior possible? And psychology is not just a bunch of fancy theories.

Psychology is a vibrant, growing field because psychologists’ ideas and skills are used

every day in thousands of ways to solve real-world problems.

To start your introduction to psychology, first survey the range of topics you will be

studying in this course. The section on “What do psychologists study?” reviews the scope of

topics covered in this course and allows you to see some of the general themes the various

units develop. When you are finished with your survey of topics, the section on “What do

psychologists do?” gives you some sense of the scope of psychological work and professional

fields where psychological training is essential.

Your work in the rest of this module will also introduce you to one of the essential

features of this course: Learning by Doing. Research and experience tell us that active

involvement in learning is far more effective than mere passive reading or listening.

Learning by Doing doesn’t need to be complicated or difficult. It simply requires that you

get out of automatic mode and think a bit about the ideas you are encountering. We will get

you to participate a bit in your introduction to the online materials and to the field of

psychology, so you can Learn by Doing.

You will also encounter another type of activity: Did I Get This? These brief quizzes allow

you to determine if you are on track in your understanding of the material. Take the Did I

Get This? quiz after each section of the module. If you do well, you might decide that you

have mastered the material enough to go on. However, keep in mind that you, not the quiz,

should decide if you are ready. The quiz is just there to help you.

Before you leave a particular section of a module, you will usually have the opportunity to

check your knowledge. The activities called Did I Get This? are brief quizzes about the

material you have just been studying. Use them to monitor your understanding of the

material before moving on.

You now know that psychology is a big field and psychologists are interested in a great

variety of issues. In order to introduce you to so much material, we will often have to

focus on specific issues or problems and on an experiment or theory that addresses that issue or
problem. Much of this research is conducted in university laboratories. This may give you

the impression that psychologists only work in universities, conducting experiments with

undergraduate psychology students. It is true that a lot of research takes place in

university labs, but the majority of psychologists work outside of the university

setting.

The next Learn by Doing will give you a chance to consider different ways that people with

training in psychology use their skills. In the second module of this introductory unit, we

will explore the various areas of psychology more systematically, so this is just a first

look at the scope of the work psychologists do.

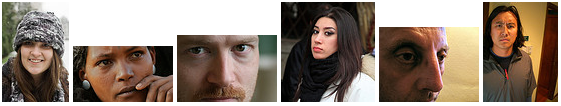

Learn By Doing

Your task is to categorize the work of each of our seven psychologists as best

fitting basic research, mental health, or applied psychology. In the real world, a

single individual might work in two or all three of these areas, but, for the sake of

our exercise, find the best fit for the description. Answer by clicking on the

appropriate box below.

Images in this activity courtesy of Kim J, Matthews NL, Park S (CC-BY-2.5), FrozenMan (Public Domain), and Brian J. McDermott (CC-BY-2.0).

In the next module of this introductory unit you will learn a bit about the history of

psychology and some of the major issues that influence psychological thinking.

In this module we review some of the important philosophical questions that psychologists
attempt to answer, the evolution of psychology from ancient philosophy, and how
psychology became a science. You will learn about the early schools (or approaches) of
psychological inquiry and some of the important contributors to each of these early
schools of psychology. You will also learn how some of these early schools influenced
the newer contemporary perspectives of psychology.

The approaches that psychologists originally used to assess the issues that interested

them have changed dramatically over the history of psychology. Perhaps most importantly,

the field has moved steadily from speculation about the mind and behavior toward a more

objective and scientific approach as the technology available to study human behavior

has improved. There has also been an increasing influx of women into the field. Although

most early psychologists were men, now most psychologists, including the presidents of

the most important psychological organizations, are women.

Questions That Psychologists Ask

Although psychology has changed dramatically over its history, several questions that

psychologists address have remained constant and we will discuss them both here

and in the units and modules to come:

-

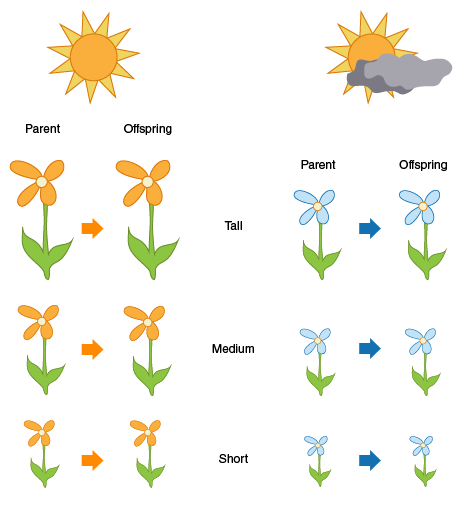

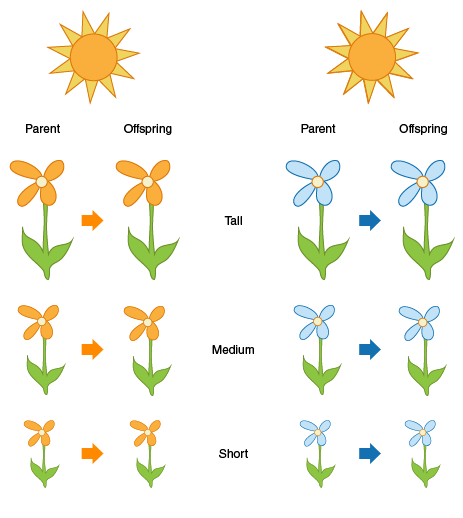

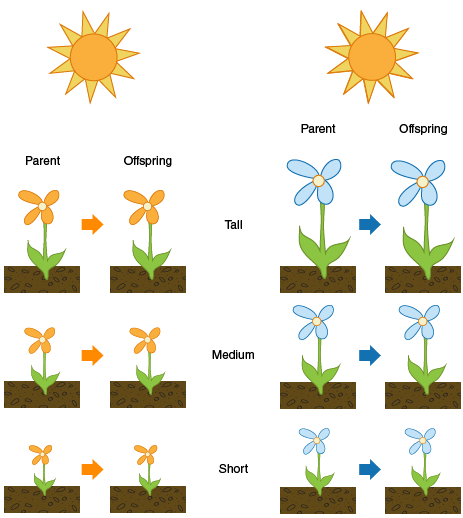

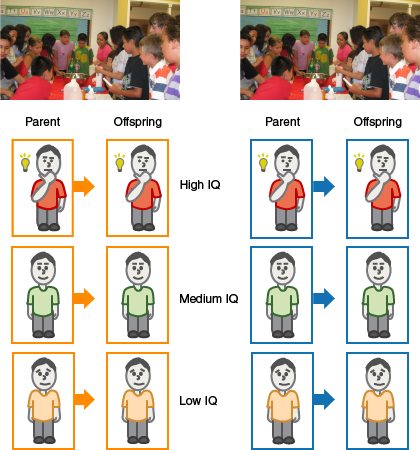

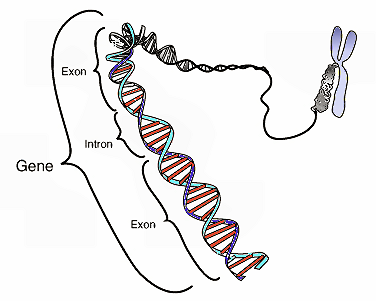

Nature versus nurture. Are genes or environment most influential in

determining the behavior of individuals and in accounting for differences

among people? Most scientists now agree that both genes and environment play

crucial roles in most human behaviors, and yet we still have much to learn

about how nature (our biological makeup) and nurture (the experiences that

we have during our lives) work together. In this course we will see that

nature and nurture interact in complex ways, making the question “Is it

nature or is it nurture?” very difficult to answer.

- Mind versus body. How is the mind (our thoughts, feelings, and ideas) related to the body and brain? Are they the same, or are they different and separate entities? The relationship between the mind and body/brain has been debated for centuries, and the early predominant belief was that the mind and body were separate entities. This belief became known as mind-body dualism, in which the body is physical and the mind is nonphysical, mysterious, and somehow controls the body. Others believe that the mind and body are not separate, in that the mind is a result of activity in the brain. For example, we know that the body can influence the mind, such as when feelings of passion or other emotions take over our better judgment. Just like the debate of nature versus nurture, the debate of mind versus body does not have an either-or answer; we know today that the mind and body are intricately intertwined. Today biological psychologists are most interested in this reciprocal relationship between biology and behavior.

-

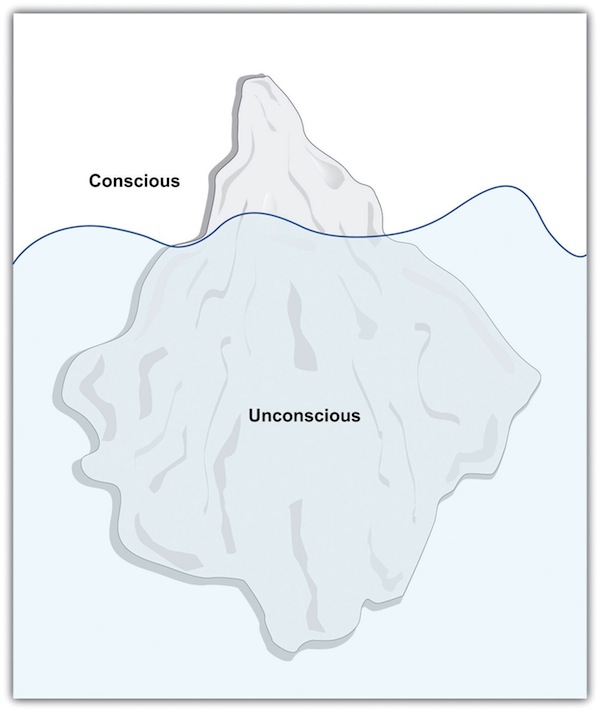

Automatic versus controlled processing. One of the great

contributions of Sigmund Freud to psychology was his emphasis on unconscious

mental activities that influence and even control our thoughts and

behaviors. Now, in the age of neuroscience, Freud’s ideas have been

radically updated and revised. Much of our normal thinking, perceiving, and

acting occurs through brain processes that work automatically, often because

we have learned to do them so well and practiced them so much that they

occur whether or not we are aware of their occurrence. For example, as you

read these words, your visual system quickly and effortlessly translates

black lines on a white background into words that have meaning. This is

automatic processing, and you can’t avoid it. (Look at

the following word but do not read it: FORK). However, you can also process information in a

slow, analytic way, focusing attention on an object or an idea. For example,

if I ask you how many different meanings the word “bank” has, the answer

comes slowly and you probably use conscious strategies to search your mind

for possible meanings (“Hmm, the side of a river and a

place you put money. Oh, and the inward tilting movement of an airplane.

And an elevated part of the ocean floor. And...."). The study of

automatic and controlled processes is an important part of our study of

child and adolescent development, clinical conditions, and communication

through language, to name just a few areas of application.

- Differences versus similarities. To what extent are we all similar, and to what extent are we different? For instance, are there basic psychological and personality differences between men and women, or are men and women by and large similar? And what about people from different ethnicities and cultures? Are people around the world generally the same, or are they influenced by their backgrounds and environments in different ways? Personality, social, and cross-cultural psychologists attempt to answer these classic questions.

Learn By Doing

Directions: Read each scenario and answer the questions

about how each situation might be viewed by a psychologist.

Scenario 1: Alex and Julie, his girlfriend, are having a
discussion about aggression in men and women. Julie thinks that males are much
more aggressive than females because males have more physical fights and get
into more trouble with the law than females. Alex does not agree with Julie and tells
her that even though males get into more physical fights, he thinks that females
are much more aggressive than males because females engage more in gossip,
social exclusion, and spreading of malicious rumors than males. Is Alex’s or Julie’s
thinking about aggression correct?

Scenario 2: Your genetics give you certain physical traits
and cognitive capabilities. You are great at math but may never be an artist.
Sometimes in life, you have to accept the traits and abilities that have been
given to you, even though you may wish you were different.

Scenario 3: Raul is having a discussion with his mother

about his father, Tomas, and his brother, Hector. Tomas, the father, is an

alcoholic and Raul expresses to his mother that he is concerned about his

brother, Hector, who is also beginning to drink a lot. Raul does not want his

brother to turn out like his father. Raul’s mother tells him that there have

been several men in the family who are alcoholics such as his grandfather and

two uncles. She says that it runs in the family and that his brother, Hector,

can’t help himself and he will also be an alcoholic. Raul responds that he
thinks they could stop drinking if they wanted to, despite the history of
alcoholism in the family. “After all, look at me. I don’t drink and my friends
don’t either. I don’t think I will become an alcoholic because I have friends
who know how to control themselves.”

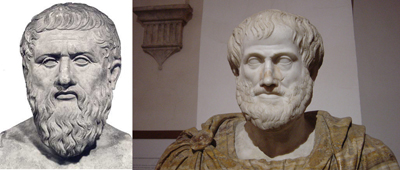

The earliest psychologists that we know about are the Greek philosophers Plato

(428–347 BC) and Aristotle (384–322 BC). These philosophers asked many of the same

questions that today’s psychologists ask; for instance, they questioned the

distinction between nature and nurture and mind and body. For example, Plato argued

on the nature side, believing that certain kinds of knowledge are innate or inborn,

whereas Aristotle was more on the nurture side, believing that each child is born as
an “empty slate” (in Latin, a tabula rasa) and that knowledge is primarily acquired
through sensory learning and experiences.

European philosophers continued to ask these fundamental questions during the
Renaissance. For instance, the French philosopher René Descartes (1596–1650)
promoted the belief that the mind (the mental aspects of life) and body (the
physical aspects of life) were separate entities. He argued that the mind controls
the body through the pineal gland in the brain (an idea that made some sense at the
time but was later proved incorrect). This relationship between the mind and body is
known as mind-body dualism, in which the mind is fundamentally different from the
mechanical body, so much so that we have free will to choose the behaviors that we
engage in. Descartes also believed in the existence of innate natural abilities
(nature).

Another European philosopher, Englishman John Locke (1632–1704), is known for his
viewpoint of empiricism, the belief that the
newborn’s mind is a “blank slate” and that the accumulation of experiences molds
the person into who he or she becomes.

The fundamental problem that these philosophers faced was that they had few methods

for collecting data and testing their ideas. Most philosophers didn’t conduct any

research on these questions, because they didn’t yet know how to do it and they

weren’t sure it was even possible to objectively study human experience. Over time, however,
philosophers began to argue for the experimental study of human behavior.

Gradually in the mid-1800s, the scientific field of psychology gained its

independence from philosophy when researchers developed laboratories to examine and

test human sensations and perceptions using scientific methods. The first two

prominent research psychologists were the German psychologist Wilhelm Wundt

(1832–1920), who developed the first psychology laboratory in Leipzig, Germany in

1879, and the American psychologist William James (1842–1910), who founded an

American psychology laboratory at Harvard University.

The Early Schools of Psychology: No Longer Active

| School of Psychology | Description | Earliest Period | Historically Important People |
|---|---|---|---|
| Structuralism | Uses the method of introspection to identify the basic elements or "structures" of psychological experiences. | Late 19th Century | Wilhelm Wundt, Edward B. Titchener |
| Functionalism | Inspired by Darwin's work in biology. Attempted to explain behavior, emotion, and thought as active adaptations to environmental pressures. These ideas influenced later behaviorism and evolutionary psychology. | Late 19th Century | William James, John Dewey |

From Flat World Knowledge: Adapted from Introduction to Psychology, v1.0. CC-BY-NC-SA.

| Early Schools of Psychology: Still Active and Advanced Beyond Early Ideas |

|---|

| From Flat World Knowledge: Adapted from Introduction to Psychology, v1.0. CC-BY-NC-SA. |

| School of Psychology |

Description |

Earliest Period |

Historically Important People |

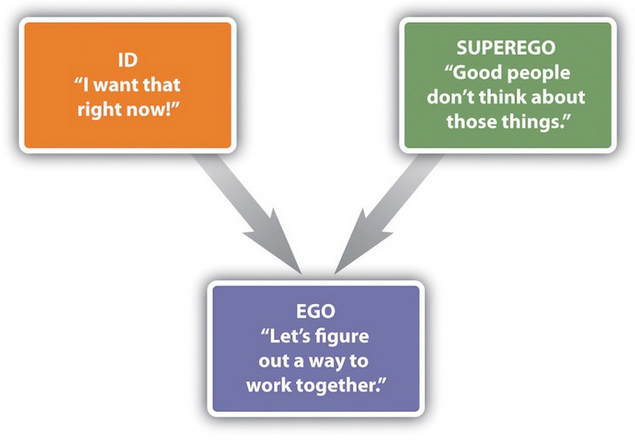

| Psychodynamic Psychology |

Focuses on the role of our unconscious thoughts, feelings, and memories and our

early childhood experiences in determining behavior. Modern psychodynamic psychology

has built on Freud's original ideas, and it has also influenced modern

neuroscience. |

Very late 19th to Early 20th Century |

Sigmund Freud, Erik Erikson |

| Behaviorism |

Based on the premise that it is not possible to objectively study the mind.

Therefore, psychologists should limit their attention to the study of behavior

itself. Contemporary behaviorism is an active field increasingly integrated with

cognitive-neuroscience. |

Early 20th Century |

Ivan Pavlov, John B. Watson, B. F. Skinner |

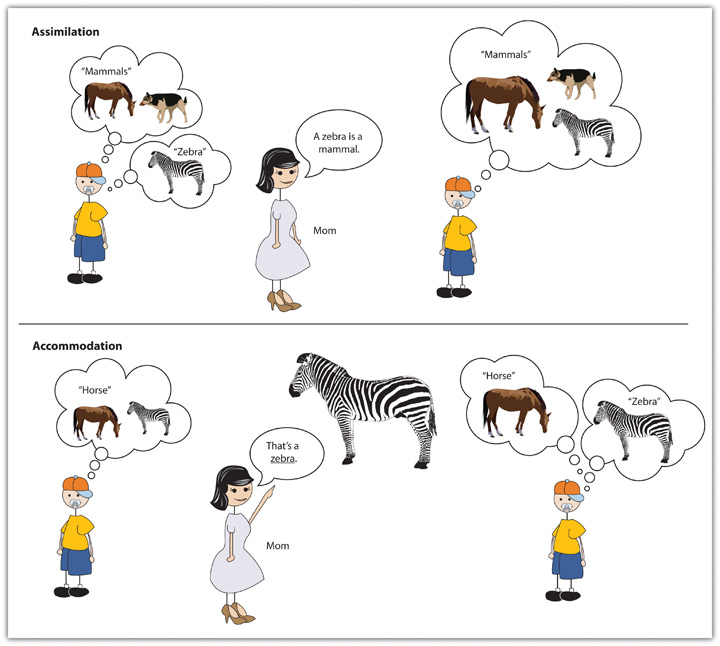

| Cognitive Development |

Studies the growth of thought and language processes in infants and children.

Emphasizes the idea that children are not incompetent adults but think creatively and

effectively based on their limited experience in the world. Modern cognitive

psychology owes a great deal to these early cognitive development researchers. |

1920s |

Jean Piaget, Lee Vygotsky |

| Humanistic Psychology |

Emphasizes the development of a healthy, effectively functioning person. Rejects the

idea that clinical psychology and other applied areas should focus only on disorders

and problems. This school developed ideas of self-actualization, personal

responsibility, and human potential. Contemporary positive psychology has been

strongly influence by humanistic psychology. |

1950s |

Abraham Maslow, Carl Rogers |

|

Structuralism: The Influence of Chemistry and Measurement

Wundt’s research in his laboratory in Leipzig focused on the nature of consciousness

itself. Wundt and his students believed that it was possible to analyze the basic

elements of the mind and to classify our conscious experiences scientifically. This

focus developed into the field known as structuralism, a school of psychology whose goal was to identify the basic

elements or “structures” of psychological experience. Its goal was to create

a “periodic table” of the “elements of sensations,” similar to the periodic table of

elements that had recently been created in chemistry.

Structuralists used the method of introspection in an attempt to create a map of the

elements of consciousness. Introspection involves asking research participants to describe exactly what they

experience as they work on mental tasks, such as viewing colors, reading a

page in a book, or performing a math problem. A participant who is reading a book

might report, for instance, that he saw some black and colored straight and curved

marks on a white background. In other studies the structuralists used newly invented

reaction time instruments to systematically assess not only what the participants

were thinking but how long it took them to do so. Wundt discovered that it took

people longer to report what sound they had just heard than to simply respond that

they had heard the sound. These studies marked the first time researchers realized

that there is a difference between the sensation of a

stimulus and the perception of that stimulus, and the idea of

using reaction times to study mental events has now become a mainstay of cognitive

psychology.

Perhaps the best known of the structuralists was Edward Bradford Titchener

(1867–1927). Titchener was a student of Wundt who came to the United States in the

late 1800s and founded a laboratory at Cornell University. In his research using

introspection, Titchener and his students claimed to have identified more than 40,000

sensations, including those relating to vision, hearing, and taste.

An important aspect of the structuralist approach was that it was rigorous and

scientific. The research marked the beginning of psychology as a science, because it

demonstrated that mental events could be quantified. But the structuralists also

discovered the limitations of introspection. Even highly trained research

participants were often unable to report on their subjective experiences. When the

participants were asked to do simple math problems, they could easily do them, but

they could not easily answer how they did them. Thus the

structuralists were the first to realize the importance of unconscious processes—that

many important aspects of human psychology occur outside our conscious awareness and

that psychologists cannot expect research participants to be able to accurately

report on all of their experiences. Introspection was eventually abandoned because it

was not a reliable method for understanding psychological processes.

Functionalism: The Influence of Biology

In contrast to structuralism, which attempted to understand the nature of

consciousness, the goal of William James and the other members of the school of functionalism was to understand why animals

and humans have developed the particular psychological aspects that they currently

possess. For James, one’s thinking was relevant only to one’s behavior. As he

put it in his psychology textbook, “My thinking is first and last and always for the

sake of my doing.”

James and the other members of the functionalist school were influenced by Charles

Darwin’s (1809–1882) theory of natural selection, which

proposed that the physical characteristics of animals and humans

evolved because they were useful, or functional. The functionalists believed

that Darwin’s theory applied to psychological characteristics too. Just as some

animals have developed strong muscles to allow them to run fast, the human brain, so

functionalists thought, must have adapted to serve a particular function in human

experience.

Although functionalism no longer exists as a school of psychology, its basic

principles have been absorbed into psychology and continue to influence it in many

ways. The work of the functionalists has developed into the field of evolutionary psychology, a contemporary perspective of

psychology that applies the Darwinian theory of natural selection to human and

animal behavior. You will learn more about the perspective of evolutionary

psychology in the next section of this module.

Psychodynamics: The Foundation of Clinical Psychology

Perhaps the school of psychology that is most familiar to the general public is the

psychodynamic approach to understanding behavior, which was championed by Sigmund

Freud (1856–1939) and his followers. Psychodynamic psychology

is an approach to understanding human behavior that focuses on the

role of unconscious thoughts, feelings, and memories. Freud developed his

theories about behavior through extensive analysis of the patients that he treated in

his private clinical practice. Freud believed that many of the problems that his

patients experienced, including anxiety, depression, and sexual dysfunction, were the

result of the effects of painful childhood experiences that the person could no

longer remember.

Freud’s ideas were extended by other psychologists whom he influenced, including Erik

Erikson (1902–1994). These and others who follow the psychodynamic approach believe

that it is possible to help the patient if the unconscious drives can be remembered,

particularly through a deep and thorough exploration of the person’s early sexual

experiences and current sexual desires. These explorations are revealed through talk

therapy and dream analysis, in a process called psychoanalysis.

The founders of the school of psychodynamics were primarily practitioners who worked

with individuals to help them understand and confront their psychological symptoms.

Although they did not conduct much research on their ideas, and although later, more

sophisticated tests of their theories have not always supported their proposals,

psychodynamics has nevertheless had a substantial impact on the perspective of clinical

psychology and, indeed, on thinking about human behavior more generally. The

importance of the unconscious in human behavior, the idea that early childhood

experiences are critical, and the concept of therapy as a way of improving human

lives are all ideas that are derived from the psychodynamic approach and that remain

central to psychology.

Behaviorism: How We Learn

Although they differed in approach, both structuralism and functionalism were

essentially studies of the mind. The psychologists associated with the school of

behaviorism, on the other hand, were reacting in part to the difficulties

psychologists encountered when they tried to use introspection to understand

behavior. Behaviorism is a school of

psychology that is based on the premise that it is not possible to objectively

study the mind, and therefore that psychologists should limit their attention to

the study of behavior itself. Behaviorists believe that the human mind is a

“black box” into which stimuli are sent and from which responses are received. They

argue that there is no point in trying to determine what happens in the box because

we can successfully predict behavior without knowing what happens inside the mind.

Furthermore, behaviorists believe that it is possible to develop laws of learning

that can explain all behaviors.

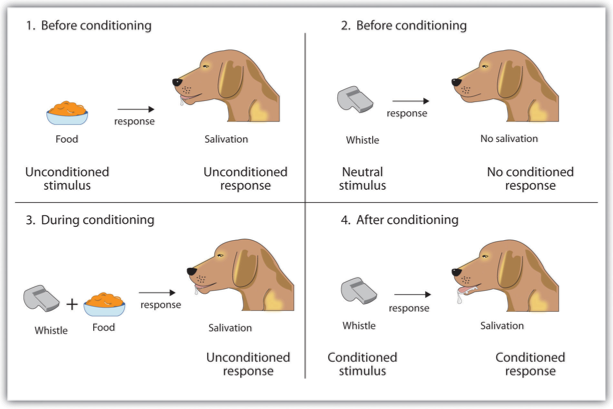

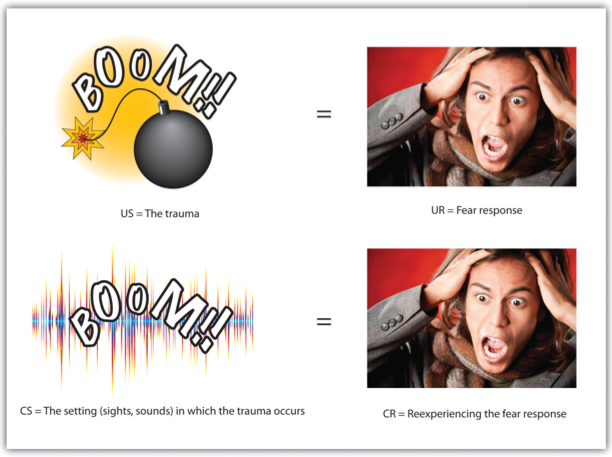

The first behaviorist was the American psychologist John B. Watson (1878–1958).

Watson was influenced in large part by the work of the Russian physiologist Ivan

Pavlov (1849–1936), who had discovered that dogs would salivate at the sound of a

tone that had previously been associated with the presentation of food. Watson and

other behaviorists began to use these ideas to explain how events that people and

animals experienced in their environment (stimuli) could

produce specific behaviors (responses). For instance, in

Pavlov’s research the stimulus (either the food or, after learning, the tone) would

produce the response of salivation in the dogs.

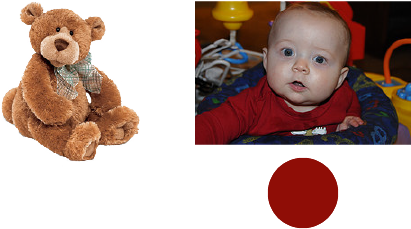

In his research Watson found that systematically exposing a child to fear-inducing stimuli

in the presence of objects that did not themselves elicit fear could lead the child

to respond with fearful behavior to those objects alone. In the best known

of his studies, an 8-month-old boy named Little Albert was used as the subject. Here

is a summary of the findings:

The baby was placed in the middle of a room; a white laboratory rat was placed near

him and he was allowed to play with it. The child showed no fear of the rat. In later

trials, the researchers made a loud sound behind Albert’s back by striking a steel

bar with a hammer whenever the baby touched the rat. The child cried when he heard

the noise. After several such pairings of the two stimuli, the child was again shown

the rat. Now, however, he cried and tried to move away from the rat. In line with the

behaviorist approach, Little Albert had learned to associate the white rat with the

loud noise, resulting in crying.

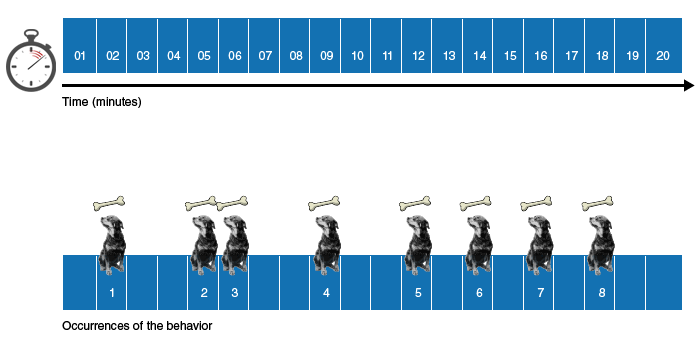

The most famous behaviorist was Burrhus Frederic (B. F.) Skinner (1904–1990), who

expanded the principles of behaviorism and also brought them to the attention of the

public at large. Skinner used the ideas of stimulus and response, along with the

application of rewards or reinforcements, to train pigeons

and other animals. He used the general principles of behaviorism to develop theories

about how best to teach children and how to create societies that were peaceful and

productive. Skinner even developed a method for studying thoughts and feelings using

the behaviorist approach.

The behaviorists made substantial contributions to psychology by identifying the

principles of learning. Although the behaviorists were

incorrect in their belief that it was not possible to measure thoughts and feelings,

their work provided new insights that helped further our understanding of the

nature-nurture and mind-body debates. The ideas of behaviorism are fundamental to

psychology and have been developed to help us better understand the role of prior

experiences in a variety of areas of psychology.

Cognitive Development: The Brain and How it Thinks

During the first half of the twentieth century, evidence emerged that learning was

not as simple as it was described by the behaviorists. Several psychologists studied

how people think, learn and remember. And this approach became known as cognitive psychology, a field of psychology

that studies mental processes, including perception, thinking, memory, and

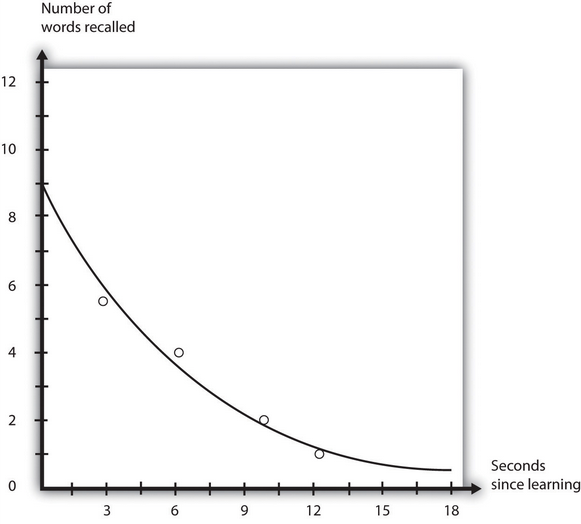

judgment. The German psychologist Hermann Ebbinghaus (1850–1909) showed how

memory could be studied and understood using basic scientific principles. The English

psychologist Frederick Bartlett also looked at memory but focused more on how our

memories can be distorted by our beliefs and expectations.

The two individuals from this time who arguably made the strongest impact on

contemporary cognitive psychology were two great students of child development: the

Swiss psychologist Jean Piaget (1896–1980) and the Russian psychologist Lev Vygotsky

(1896–1934).

Jean Piaget was a prolific writer, a brilliant systematizer, and a creative observer

of children. Using interviews and situations he contrived, he studied the thinking

and reasoning of children from their earliest days into adolescence. He is best known

for his theory that tracks the development of children’s thinking into a series of

four major stages, each with several substages. Within each stage, Piaget pointed to

behaviors and responses to questions that revealed how the developing child

understands the world. One of Piaget’s critical insights was that children are not

deficient adults, so when they do something or make a judgment that, in an adult,

might seem to be a mistake, we should not assume that it is a mistake from the

child’s perspective. Instead, the child may be using the knowledge and reasoning that

are completely appropriate at his or her particular age to make sense of the world.

For example, Piaget found that children often believe that other people know or can

see whatever they know or can see. So, if you show a young child a scene containing

several dolls, where a particular doll is visible to the child but blocked from your

view by a dollhouse, the child will simply assume that you can see the blocked doll.

Why? Because he or she can see it. Piaget called this thinking egocentrism, by which he meant that the child’s thinking

is centered in his or her own view of the world (not that the child is

selfish). If an adult made this error, we would find it odd. But it is quite

natural for the child, because prior to about 4 years of age, children do not

understand that different minds (theirs and yours) can know different things.

Egocentric thinking is normal and healthy for a 2-year-old (though not for a

20-year-old).

During the same years that Piaget was interviewing children and trying to chart the

course of development, Russian psychologist Lev Vygotsky was struck by the rich

social forces that influenced and even guided cognitive development. Like Piaget,

Vygotsky observed children playing with one another, and he saw how children guide

each other to learn social rules and, through those, to improve self-regulation of

behavior and thoughts.

Vygotsky’s best known contribution was his analysis of the interactions of children

and parents that lead to the development of more and more sophisticated thinking. He

suggested that the effective parent or teacher is one who helps

the child reach beyond his or her current level of thinking by creating

supports, which Vygotsky’s followers called scaffolding. For example, if a teacher wants the child to learn the difference

between a square and a triangle, she might allow the child to play with cardboard

cutouts of the shapes, and help the child count the number of sides and angles on

each. This assisted exploration is a scaffold—a set of supports for the child who is

actively doing something—that can help the child do things and explore in ways that

would not be likely or even possible alone.

Both Piaget and Vygotsky emphasized the mental development of the child and gave

later psychologists a rich set of theoretical ideas as well as observable phenomena

to serve as a foundation for the science of the mind that blossomed in the middle and

late 20th century and is the core of 21st century psychology.

Humanistic Psychology: A New Approach

By the 1950s, a clear contrast existed between psychologists who favored

behaviorism, which focused exclusively on behavior that is shaped by the

environment, and those who favored psychodynamic psychology, which focused on

unconscious mental processes to explain behavior. Many of the psychodynamic

therapists became disillusioned with the results of their therapy and began to

propose new ways of thinking about behavior, arguing that human behavior, unlike

that of animals, was not innately uncivilized, as Freud, James, and Skinner believed.

Humanism developed on the beliefs that humans are inherently good, have free

will to make decisions, and are motivated to improve themselves and reach

their highest potential. Instead of focusing on what went wrong with people’s

lives, as did the psychodynamic psychologists, humanists asked

questions about what made a person “good.” Thus, a new approach to psychology

emerged called humanism, an early school of psychology

which emphasized that each person is inherently good and

motivated to learn and improve to become a healthy, effectively functioning

individual. Abraham Maslow and Carl Rogers are credited with developing

the humanistic approach.

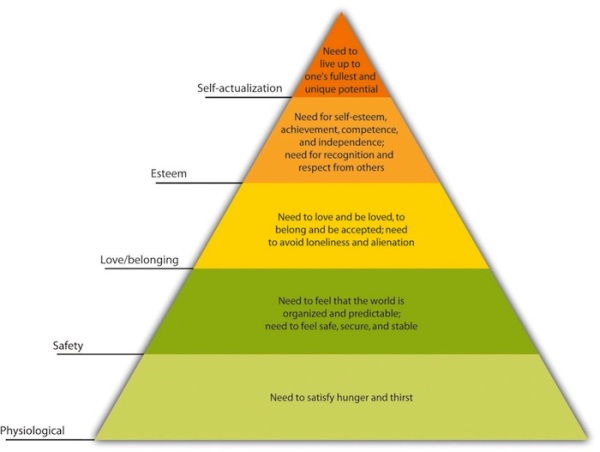

Abraham Maslow (1908–1970) developed the theory of self-motivation in which we all

have a basic, broad need to develop our special unique human

potential, which he called the drive for self-actualization. He proposed that, in order for us to achieve

self-actualization, several basic needs, beginning with the physiological needs of

hunger, thirst, and maintenance of other internal states of the body, must first

be met. As the lower-level needs are satisfied, we become motivated

to satisfy higher-order needs, such as safety, belonging and love, and

self-esteem, until we ultimately achieve self-actualization. Maslow’s

hierarchy of needs represents our internal motivation to strive for

self-actualization. Achieving self-actualization means that one has

achieved one’s unique and special human potential and is able to lead a positive

and fulfilling life.

Carl Rogers (1902–1987), originally a psychodynamic therapist, developed a new

therapy approach which he called client-centered therapy. This therapy approach

viewed the person, not as a patient, but rather as a client with more equal

status with the therapist. He believed that the client, like every person,

should be respected and valued for his or her unique and special abilities and

potential, and that the person had the ability to make conscious decisions and

the free will to achieve his or her highest potential.

While the humanistic school of psychology has been criticized for its lack of

rigorous experimental investigation and for being more of a philosophical approach, it has

influenced current thinking on personality theories and psychotherapy methods.

Furthermore, the foundations of the early school of humanism evolved into the

contemporary perspective of positive psychology, the scientific

study of optimal human functioning.

learn by doing

Psychologist Abraham Maslow introduced the concept of a hierarchy of needs,

which suggests that people are motivated to fulfill basic needs before moving

on to other, more advanced needs. Consider how this may influence our

development, motivation, and accomplishments. Choose which level of needs would

best explain the scenario below.

As you may have noticed in the six early schools of psychology, each attempted to answer

psychological questions with a single approach. While some attempted to build a big theory

around their approach (and some did not even attempt to), no one school was successful. By the

mid-20th century, the field of psychology was still a very young science, but it was

gaining a lot of diverse attention and popularity. Psychologists began to study mental

processes and behavior from their own specific points of interest and view. Thus, some of

the specific viewpoints became known as perspectives from which to

investigate a specific psychological topic.

Today, contemporary psychology reflects several major perspectives, such as

biological/neuroscience, cognitive, behavioral, social, developmental, clinical, and

individual differences/personality. This is not a complete list of perspectives, and your

instructor may introduce others. What’s important to know is that today all psychologists

believe that there is no one specific perspective with which to study psychology, but

rather any given topic can be approached from a variety of perspectives. For example,

how an infant learns language can be studied from each of the different

perspectives, and each could provide information from a different viewpoint about the child’s

learning. Also, as perspectives become more specific, we see that the perspectives are

interconnected with each other, meaning that it is difficult to study any topic on human

thought or behavior from just one perspective without considering the complex influence of

information from other perspectives.

Contemporary Perspectives of Psychology

| Perspective | Major Emphasis | Examples of Research Questions |
|---|---|---|
| Behavioral Neuroscience | Genetics and the links among brain, mind, and behavior | What brain structures influence behavior? If a brain function is altered, how is behavior affected? To what extent do genes influence behavior when the environment is manipulated? |
| Biological | Relationship between bodily systems and chemicals and how they influence behavior and thought | How do hormones and neurotransmitters affect thought and behavior? |
| Cognitive | Thinking, decision-making, problem-solving, memory, language, and information processing | What are the processes for developing language, memory, decision-making, and problem-solving? How does thinking affect behavior? What is intelligence, and how is it determined? What affects intelligence, memory, language, and information processing? |
| Social | Concepts of self and social interaction and how they differ across cultures and shape behavior | How do social stresses affect self-concept? How do social conditions contribute to destructive behavior? How are behaviors affected by cultural differences? |
| Developmental | How and why people change or remain the same over time from conception to death | How does a person’s development from conception to death change or stay the same in the biosocial, cognitive and psychosocial domains? What changes are universal or unique to a person? Which periods of life are critical or sensitive for certain development? |
| Clinical | Diagnosis and treatment of mental, emotional, and behavioral disorders and promotion of psychological health | What are the cognitive and behavioral characteristics and symptoms of a mental or behavioral abnormality? What treatment methods are most effective for the various emotional and behavioral disorders? |
| Individual Differences/Personality | Uniqueness and differences of people and the consistencies in behavior across time and situations | What determines personality? How does personality change or remain the same as one ages or experiences social or environmental situations? |

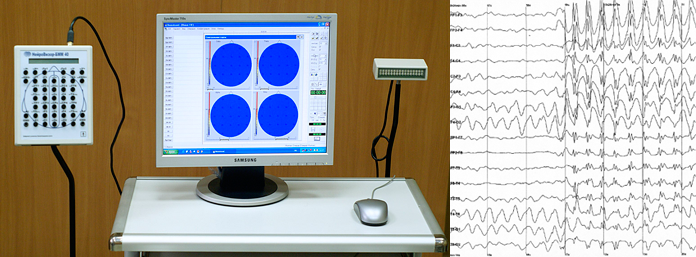

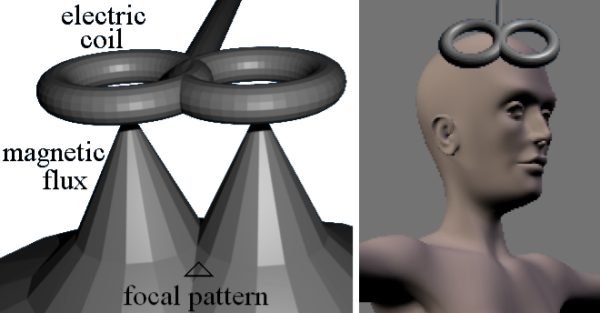

Behavioral Neuroscience

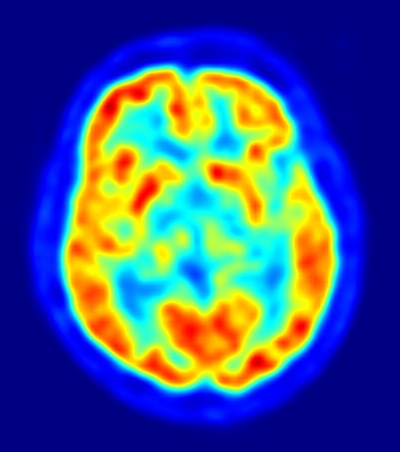

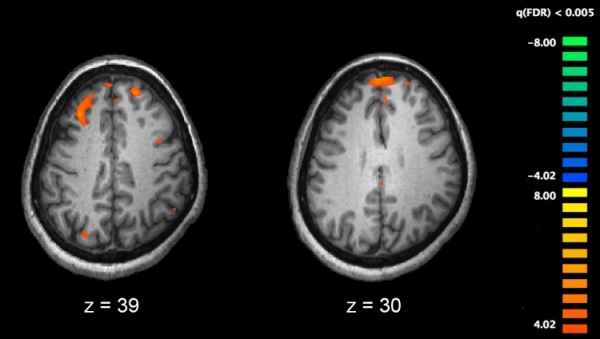

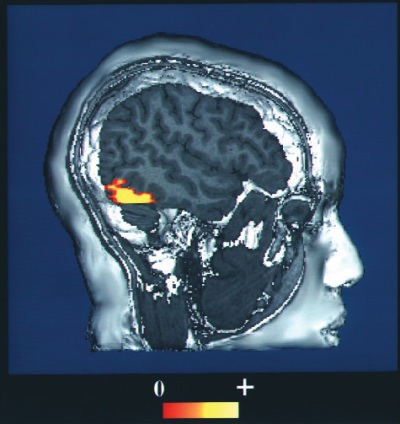

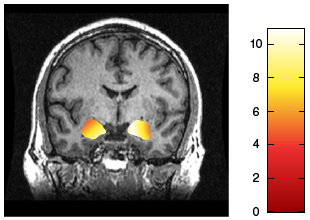

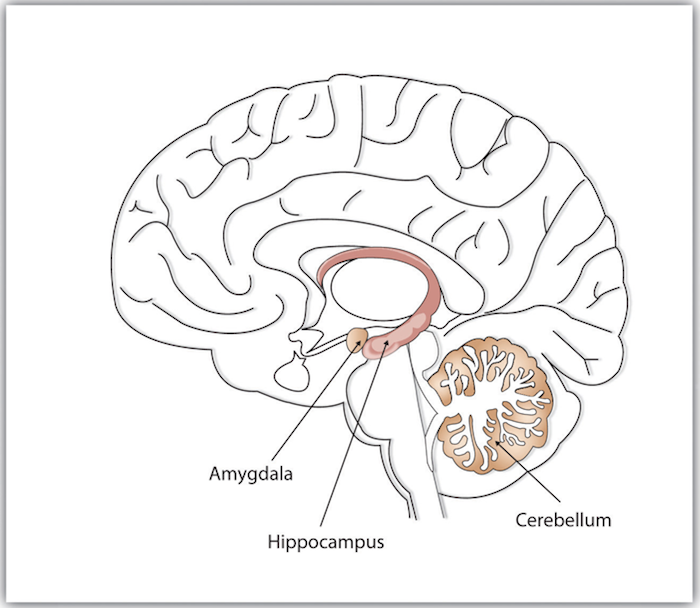

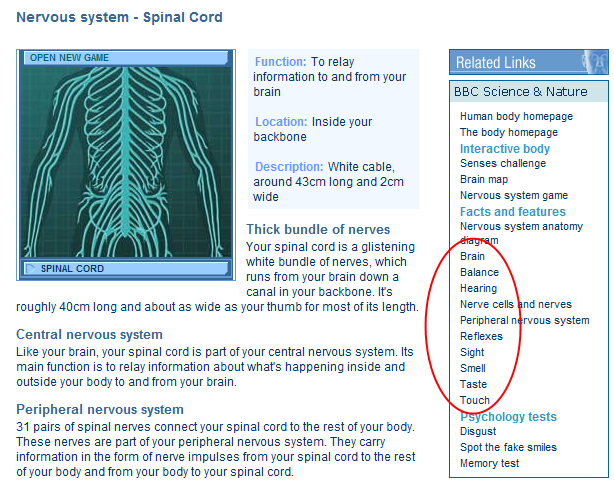

Behavioral neuroscience studies the links among the brain, mind, and behavior. This

perspective used to be called psychobiological psychology, which studied the

biological roots of behavior, such as brain structure and brain activity. Because of

advancements in our ability to view the intricate workings of the brain, called

neuroimaging, the name behavioral neuroscience is now used for this broad discipline.

Neuroimaging is the use of various

techniques to provide pictures of the structures and functions of the living

brain. As you read about the following contemporary psychological

perspectives, you will see how interconnected these perspectives are, largely due to

neuroimaging techniques.

For example, neuroimaging techniques are used to study brain functions in learning,

emotions, social behavior, and mental illness, each of which has its own specialty

perspective (see the descriptions of these perspectives below). Also, the two

perspectives of behavioral neuroscience and biological psychology are closely

interconnected in that neuroimaging techniques such as electrical brain

recordings enable biological psychologists to study the structure and functions of

the brain. Another example is behavioral genetics, which is the study of

how genes influence cognition, physical development, and behavior.

Another related perspective is evolutionary psychology, which

supports the idea that the brain and body are products of

evolution and that inheritance plays an important role in shaping thought and

behavior. This perspective developed from the functionalists’ basic

assumption that many human psychological systems, including memory, emotion and

personality, serve key adaptive functions called fitness characteristics.

Evolutionary psychologists theorize that fitness characteristics have helped humans

to survive and reproduce throughout the centuries at a higher rate than do other

species that do not have the same fitness characteristics. Fitter organisms pass on

their genes more successfully to later generations, making the characteristics that

produce fitness more likely to become part of the organism’s nature than

characteristics that do not produce fitness. For example, evolutionary theory

attempts to explain many different behaviors including romantic attraction, jealousy,

stereotypes and prejudice, and psychological disorders. The evolutionary perspective

is important to psychology because it provides logical explanations for why we have

many psychological characteristics.

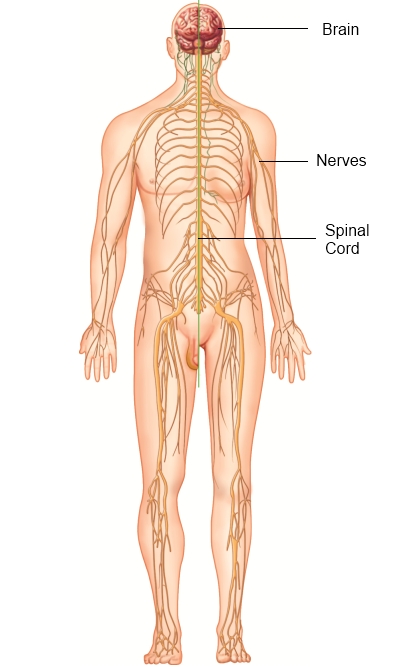

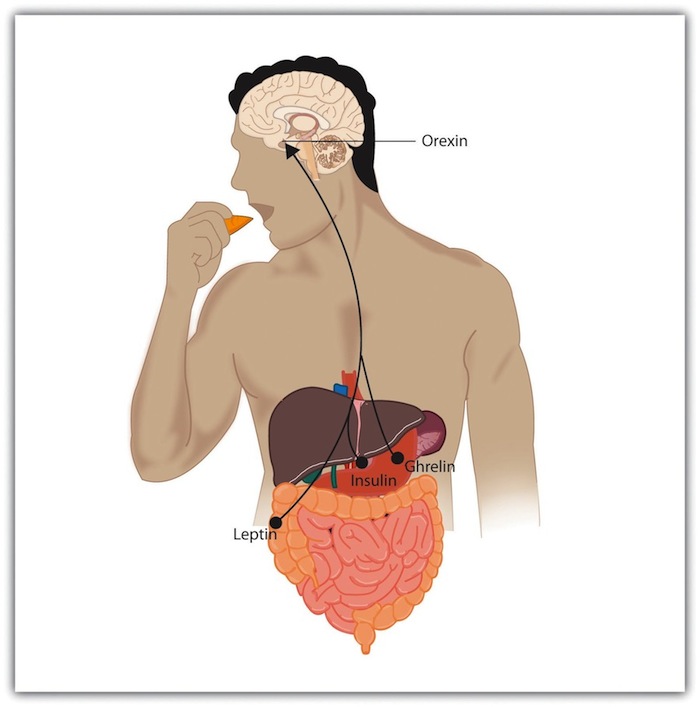

Biological Psychology

Closely related to behavioral neuroscience, the perspective of biological psychology

focuses on studying the connections between bodily systems such as the nervous and

endocrine systems and chemicals such as hormones and their relationships to behavior

and thought. Biological research on the chemicals produced in the body and brain has

helped psychologists to better understand psychological disorders such as depression

and anxiety and the effects of stress on hormones and behavior.

Cognitive Psychology

Cognitive psychology is the study of how we think, process information and solve

problems, how we learn and remember, and how we acquire and use language. Cognitive

psychology is interconnected with other perspectives that study language, problem

solving, memory, intelligence, education, human development, social psychology, and

clinical psychology.

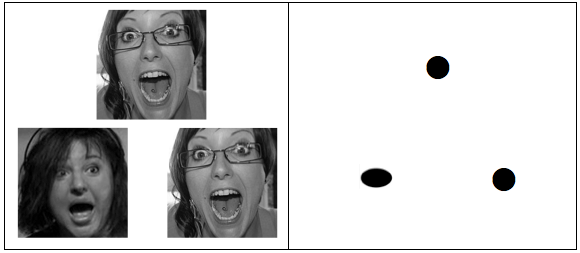

Starting in the 1950s, psychologists developed a rich and technically complex set of

ideas to understand human thought processes, initially inspired by the same insights

and advances in information technology that produced the computer, cell phone and

internet. As technology advanced, so did cognitive psychology. We are now able to see

the brain in action using neuroimaging techniques. These images are used to diagnose

brain disease and injury, but they also allow researchers to view information

processing as it occurs in the brain, because the processing causes the involved area

of the brain to increase metabolism and show up on a scan, such as one produced by functional

magnetic resonance imaging (fMRI). We discuss the use of neuroimaging techniques in

many areas of psychology in the units to follow.

Social Psychology

The field of social psychology is the study of how social

situations and cultures in which people live influence their thinking, feelings and

behavior. Social psychologists are particularly concerned with how people perceive

themselves and others, and how people influence each other’s behavior. For instance,

social psychologists have found that we are attracted to others who are similar to us

in terms of attitudes and interests. We develop our own beliefs and attitudes by

comparing our opinions to those of others and we frequently change our beliefs and

behaviors to be similar to people we care about.

Social psychologists are also interested in how our beliefs, attitudes and behaviors

are influenced by our culture. Cultures influence every aspect of our lives. For

example, fundamental differences in thinking, feeling and behaving exist among people

of Western cultures (such as the United States, Canada, Western Europe, Australia,

and New Zealand) and East Asian cultures (such as China, Japan, Taiwan, Korea, India,

and Southeast Asia). Western cultures are primarily oriented toward individualism, which is about valuing the self and one’s

independence from others, sometimes at the expense of others. East Asian

cultures, on the other hand, are oriented toward interdependence, or collectivism, which focuses on developing harmonious

social relationships with others, group togetherness and connectedness, and duty

and responsibility to one’s family and other groups.

As our world becomes more global, sociocultural research will become more interconnected

with the research of other psychological perspectives such as biological, cognitive,

personality, developmental and clinical.

Developmental Psychology

Developmental psychology is the study of the

development of a human being from conception until death. This perspective

emphasizes all of the transformations and consistencies of human life. Three major

domains or aspects of human life, cognitive, physical and socioemotional, are

researched as one ages. The cognitive domain refers to all of the mental processes

that a person uses to obtain knowledge or think about the environment. The physical

domain refers to all the growth and changes that occur in a person’s body and the

genetic, nutritional, and health factors that affect that growth and change. The

socioemotional domain includes the development of emotions, temperament, and social

skills. Developmentalists study how individuals change or remain the same over time

in each of these three domains. It is easy to see how the perspective of

developmental psychology is interconnected with all of the other major contemporary

perspectives because of the overlapping and all-encompassing aspects of the

developmental perspective.

Clinical Psychology

Clinical psychology focuses on the diagnosis and treatment of mental, emotional and

behavioral disorders and ways to promote psychological health. This field evolved from the

early psychodynamic and humanistic schools of psychology. While the clinical psychology

perspective emphasizes treating individuals so that they may lead fulfilling and productive

lives, clinical psychologists also conduct research to discover the origins of mental and

behavioral disorders and effective treatment methods. The clinical psychology perspective

is closely interconnected with behavioral neuroscience and biological psychology.

Individual Differences: Personality

Personality psychology is the study of the differences and uniqueness of people and the

influences on a person’s personality. Researchers in this field study whether personality

traits change as we age or stay the same, something that developmental psychologists also

study. Researchers interested in personality also study how environmental influences such

as traumatic events affect personality.

learn by doing

Instructions: Imagine that you are a psychologist and you want

to investigate specific behaviors of a person with Alzheimer’s disease. You have a

team of psychologists who represent several contemporary perspectives in psychology

to help you explore information as to the origin, symptoms, prevalence, influences

and causes of this brain disease and the impact on family members who care for a

relative with Alzheimer’s disease. Read the following scenario about a person who had

Alzheimer’s disease.

Alzheimer’s disease (AD), the most common type of dementia, is a

gradually progressive brain disorder that damages and destroys brain

cells. Eventually Alzheimer’s disease progresses to the point where the person

requires full nursing care. Ronald Reagan, who was president of the United States

from 1981 to 1989, announced in 1994 that he had Alzheimer’s disease. He died 10

years later at age 93. Despite extensive research, psychologists still have many

questions to be researched about this fatal disease.

Read each set of questions. While some of these sets of questions could be researched

by different psychological perspectives, try to determine which psychological

perspective would most likely want to provide answers for

each set of questions. Your team of psychologists represents the following

perspectives and only one perspective is the correct answer for each set of

questions.

Challenge Questions

As you can see, psychologists from all different contemporary perspectives can

contribute to the scientific knowledge of Alzheimer’s disease, and for that matter,

any kind of research pertaining to humans and animals.

Psychology is not one discipline but rather a collection of many subdisciplines that all

share at least some common perspectives and work together, exchanging knowledge, to form a

coherent whole. Because the field of psychology is so broad, students may wonder which

areas are most suitable for their interests and which types of careers might be available

to them. The following figure will help you consider the answers to these questions. Click

on any of the labeled, blue circles to learn more about each discipline.

Photo courtesy of longislandwins (CC-BY-2.0).

learn by doing

You can learn more about these different subdisciplines of psychology and the careers

associated with them by visiting the American Psychological Association (APA)

website.

Step 1: Go to the APA

website.

On this APA Home webpage, notice the various types of information.

Step 2: Find the box titled “Quick Links” on the APA

Homepage.

Click on the link titled Divisions.

Step 3: On the APA site, search for the topic “Undergraduate

Education.” Find the “Psychology as a Career” webpage to learn about what employers

need from an employee, and then answer the following questions.

Now search for the topic “Careers in Psychology” on the APA website. Here you can

read interesting information about the field of psychology. This section provides a

long list of subfields in psychology that psychologists specialize in. Read about

some of the interesting job tasks that psychologists perform in some of the

subfields, and then complete the following statements by identifying the subfield

that corresponds with each set of job tasks.

Psychologists aren’t the only people who seek to understand human behavior and solve

social problems. Philosophers, religious leaders, and politicians, among others, also

strive to provide explanations for human behavior. But psychologists believe that

research is the best tool for understanding human beings and their relationships with

others. Rather than accepting the claim of a philosopher that people do (or do not) have

free will, a psychologist would collect data to empirically test whether or not people

are able to actively control their own behavior. Rather than accepting a politician’s

contention that creating (or abandoning) a new center for mental health will improve the

lives of individuals in the inner city, a psychologist would empirically assess the

effects of receiving mental health treatment on the quality of life of the recipients.

The statements made by psychologists are based on empirical studies. An empirical study

yields verifiable evidence from the systematic collection and

analysis of data that have been objectively observed, measured, and tested through

experimentation.

In this unit you will learn how psychologists develop and test their research ideas; how

they measure the thoughts, feelings, and behavior of individuals; and how they analyze

and interpret the data they collect. To really understand psychology, you must also

understand how and why the research you are reading about was conducted and what the

collected data mean. Learning about the principles and practices of psychological

research will allow you to critically read, interpret, and evaluate research.

In addition to helping you learn the material in this course, the ability to interpret

and conduct research is also useful in many of the careers that you might choose. For

instance, advertising and marketing researchers study how to make advertising more

effective, health and medical researchers study the impact of behaviors such as drug use

and smoking on illness, and computer scientists study how people interact with

computers. Furthermore, even if you are not planning a career as a researcher, jobs in

almost any area of social, medical, or mental health science require that a worker be

informed about psychological research.

Psychologists study behavior of both humans and animals, and the main purpose of this

research is to help us understand people and to improve the quality of human lives. The

results of psychological research are relevant to problems such as learning and memory,

homelessness, psychological disorders, family instability, and aggressive behavior and

violence. Psychological research is used in a range of important areas, from public

policy to driver safety. It guides court rulings with respect to racism and sexism, as in

the 1954 case of Brown v. Board of Education, as well as court procedure, for example in the use of

lie detectors during criminal trials. Psychological research helps us

understand how driver behavior affects safety (for example, the effects of texting while

driving), which methods of educating children are most effective, how to best detect

deception, and the causes of terrorism.

Some psychological research is basic research. Basic research is research that answers fundamental questions about behavior. For instance,

bio-psychologists study how nerves conduct impulses from the receptors in the skin to

the brain, and cognitive psychologists investigate how different types of studying

influence memory for pictures and words. There is no particular reason to examine such

things except to acquire a better knowledge of how these processes occur. Applied

research is research that investigates issues that have implications

for everyday life and provides solutions to everyday problems. Applied research

has been conducted to study, among many other things, the most effective methods for

reducing depression, the types of advertising campaigns that serve to reduce drug and

alcohol abuse, the key predictors of managerial success in business, and the indicators

of effective government programs, such as Head Start.

Basic research and applied research inform

each other, and advances in science occur more rapidly when each type of research is

conducted. For instance, although research concerning the role of practice on memory for

lists of words is basic in orientation, the results could potentially be applied to help

children learn to read. Correspondingly, psychologist-practitioners who wish to reduce

the spread of AIDS or to promote volunteering frequently base their programs on the

results of basic research. This basic AIDS or volunteering research is then applied to

help change people’s attitudes and behaviors.

One goal of research is to organize information into meaningful statements that can be

applied in many situations.

A theory is an integrated set of principles that

explains and predicts many, but not all, observed relationships within a given domain of

inquiry. One example of an important theory in psychology is the stage theory of

cognitive development proposed by the Swiss psychologist Jean Piaget. The theory states

that children pass through a series of cognitive stages as they grow, each of which must be

mastered in succession before movement to the next cognitive stage can occur. This is an

extremely useful theory in human development because it can be applied to many different

content areas and can be tested in many different ways.

Good theories have four important characteristics. A good theory is:

- General, meaning it summarizes different outcomes.

- Parsimonious, meaning it provides the simplest possible account of those outcomes.

- A source of ideas for future research.

- Falsifiable, meaning that the variables of interest can be adequately measured and the relationships between the variables that are predicted by the theory can be shown through research to be incorrect.

Piaget’s stage theory of cognitive development meets all four characteristics of a good

theory. First, it is general in that it can account for developmental changes in behavior

across a wide variety of domains, and second, it does so parsimoniously—by hypothesizing a

simple set of cognitive stages. Third, the stage theory of cognitive development has been

applied not only to learning about cognitive skills but also to the study of children’s

moral and gender development. And finally, the stage theory of cognitive development is

falsifiable because the stages of cognitive reasoning can be measured and because if

research discovers, for instance, that children learn new tasks before they have reached

the cognitive stage hypothesized to be required for that task, then the theory will be

shown to be incorrect.

No single theory is able to account for all behavior in all cases. Rather, theories are

each limited in that they make accurate predictions in some situations or for some people

but not in other situations or for other people. As a result, there is a constant exchange

between theory and data: Existing theories are modified on the basis of collected data, and

the new modified theories then make new predictions that are tested by new data, and so

forth. When a better theory is found, it will replace the old one. This is part of the

accumulation of scientific knowledge as a result of research.

When psychologists have a question that they want to research, it usually comes from a

theory based on others’ research reported in scientific journals. Recall that a

theory is based on principles that are general and can be applied to many situations

or relationships. Therefore, when a scientist has a research question to study, the

question must be stated as a research hypothesis, which is a precise statement of the presumed relationship among specific

parts of a theory. Furthermore, a research hypothesis is a

specific and falsifiable prediction about the relationship between or among two or

more variables, where a variable is any attribute that can assume different values among different people or across

different times or places.

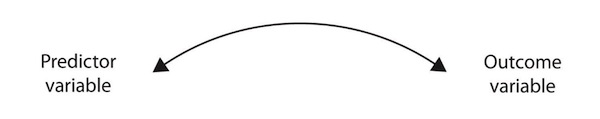

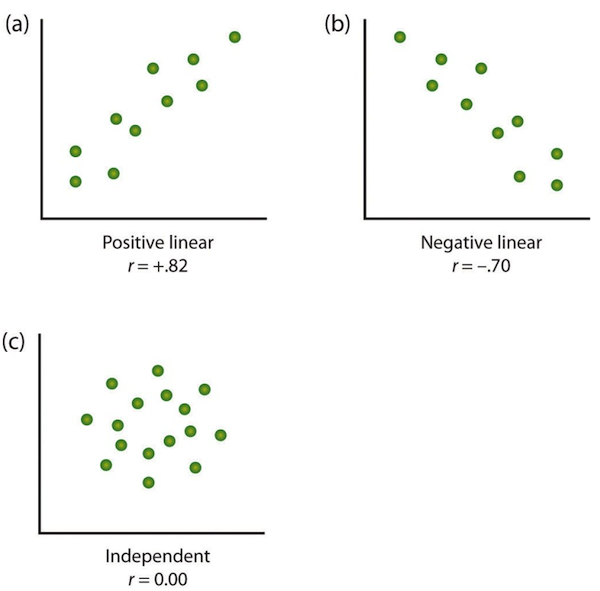

The research hypothesis states the existence of a relationship between the variables of

interest and the specific direction of that relationship. For instance, the research

hypothesis “Using marijuana will reduce learning” predicts that there is a

relationship between one variable called “using marijuana” and another variable called

“learning.” Similarly, in the research hypothesis “Participating in psychotherapy

will reduce anxiety,” the variables that are expected to be related are

“participating in psychotherapy” and “level of anxiety.”
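To make the two parts of a research hypothesis concrete (the variables involved and the predicted direction), here is a minimal Python sketch. The class name, the function names, and the sample numbers are all invented for illustration and do not come from any real study. Checking the direction of a relationship in a small sample is also not a statistical test; it only shows what the hypothesis predicts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResearchHypothesis:
    """Hypothetical container for a directional research hypothesis."""
    predictor: str   # variable expected to influence the outcome
    outcome: str     # variable expected to change
    direction: int   # +1: outcome rises with predictor, -1: outcome falls

    def statement(self) -> str:
        verb = "increase" if self.direction > 0 else "reduce"
        return f"{self.predictor} will {verb} {self.outcome}"


def covariance(xs, ys):
    """Sample covariance: positive when the two variables rise together."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    return sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / (len(xs) - 1)


def direction_matches(hypothesis, predictor_values, outcome_values):
    """True when the observed relationship has the predicted sign."""
    return covariance(predictor_values, outcome_values) * hypothesis.direction > 0


therapy = ResearchHypothesis("Participating in psychotherapy", "anxiety", -1)

# Made-up measurements for five imaginary participants.
therapy_hours = [0, 2, 4, 6, 8]
anxiety_scores = [9, 8, 6, 5, 3]

print(therapy.statement())
print(direction_matches(therapy, therapy_hours, anxiety_scores))
```

Because the hypothesis names a direction, data showing anxiety rising with therapy hours would count against it, which is what makes the prediction falsifiable.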

When stated in an abstract manner, the ideas that form the basis of a research hypothesis

are known as conceptual variables.

Sometimes the conceptual variables are rather simple—for instance, age, gender, or

weight. In other cases the conceptual variables represent more complex ideas, such as

anxiety, cognitive development, learning, self-esteem, or sexism.

The first step in testing a research hypothesis involves turning the conceptual variables

into measured variables, which are variables

consisting of numbers that represent the conceptual variables. For instance, the

conceptual variable “participating in psychotherapy” could be represented as the measured

variable “number of psychotherapy hours the patient has accrued” and the conceptual

variable “using marijuana” could be assessed by having the research participants rate, on a

scale from 1 to 10, how often they use marijuana or by administering a blood test that

measures the presence of the chemicals in marijuana.

Psychologists use the term operational definition to refer to a precise

statement of how a conceptual variable is turned into a measured variable. The following table lists some

potential operational definitions for conceptual variables that have been used in

psychological research. As you read through this list, note that in contrast to the

abstract conceptual variables, the operational definitions are measurable and very

specific. This specificity is important for two reasons. First, more specific definitions

mean that there is less danger that the collected data will be misunderstood by others.

Second, specific definitions will enable future researchers to replicate the research.

Examples of Some Conceptual Variables Defined as Operational Definitions for Psychological Research

From Flat World Knowledge, Introduction to Psychology, v1.0, CC-BY-NC-SA.

| Conceptual Variable | Operational Definitions |
|---|---|
| Aggression | Number of presses of a button that administers shock to another student; number of seconds taken to honk the horn at the car ahead after a stoplight turns green |
| Interpersonal attraction | Number of inches that an individual places his or her chair away from another person; number of millimeters of pupil dilation when one person looks at another |
| Employee satisfaction | Number of days per month an employee shows up to work on time; rating of job satisfaction from 1 (not at all satisfied) to 9 (extremely satisfied) |
| Decision-making skills | Number of groups able to correctly solve a group performance task; number of seconds in which a person solves a problem |
| Depression | Number of negative words used in a creative story; number of appointments made with a psychotherapist |
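One way to picture an operational definition is as a rule that turns a raw observation into a number. The Python sketch below applies that idea to two rows of the table above. It is only an illustration: the word list and the function names are hypothetical, and a real study would rely on a validated measure, not a five-word list.

```python
import re

# Hypothetical word list, for illustration only.
NEGATIVE_WORDS = {"sad", "hopeless", "lonely", "tired", "afraid"}


def count_negative_words(story: str) -> int:
    """Depression, measured as the number of negative words in a story."""
    words = re.findall(r"[a-z']+", story.lower())
    return sum(1 for word in words if word in NEGATIVE_WORDS)


def job_satisfaction_rating(raw_rating: int) -> int:
    """Employee satisfaction, measured as a rating from 1 to 9."""
    if not 1 <= raw_rating <= 9:
        raise ValueError("rating must be between 1 and 9")
    return raw_rating


# Each conceptual variable is paired with one rule for measuring it.
OPERATIONAL_DEFINITIONS = {
    "Depression": count_negative_words,
    "Employee satisfaction": job_satisfaction_rating,
}

story = "She felt sad and lonely. The sad dog slept."
depression_score = OPERATIONAL_DEFINITIONS["Depression"](story)
print(depression_score)
```

Writing the rule down this precisely is what allows another researcher to apply the same measurement to new data and replicate the study.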

Learn By Doing

One of the keys to developing a well-designed research study is to precisely define

the conceptual variables found in a hypothesis. When the conceptual variables in a

hypothesis are turned into measured variables, the hypothesis becomes testable. In

this activity, read each of the following statements and answer its accompanying

question to:

- distinguish the conceptual variable from its operational definition, or the variable to be tested, and

- determine whether the statement describing the variables contains the characteristics of a testable hypothesis.

Did I Get This?

Now, to make sure that you can identify the characteristics of a hypothesis and

distinguish its conceptual variables from the operational definitions used in a research

study, choose an answer that correctly completes each of the following statements.

All scientists (whether they are physicists, chemists, biologists, sociologists, or

psychologists) are engaged in the basic processes of collecting data and drawing

conclusions about those data. The methods used by scientists have developed over many

years and provide a common framework for developing, organizing, and sharing

information. The scientific method is the

set of assumptions, rules, and procedures scientists use to conduct

research.

In addition to requiring that science be empirical, the

scientific method demands that the procedures used are objective, or free from the personal bias or emotions of the scientist. The

scientific method prescribes how scientists collect and analyze data, how they draw

conclusions from data, and how they share data with others. These rules increase

objectivity by placing data under the scrutiny of other scientists and even the

public at large. Because data are reported objectively, other scientists know exactly

how the scientist collected and analyzed the data. This means that they do not have

to rely only on the scientist’s own interpretation of the data; they may draw their

own, potentially different, conclusions.

In the following activity, you will learn about a model presenting a five-step process of

scientific research in psychology. In this model, a researcher or a small group of researchers

formulates a research question and states a hypothesis, conducts a study designed to

answer the question, analyzes the resulting data, draws conclusions about the answer to

the question, and then publishes the results so that they become part of the research

literature found in scientific journals.

Because the research literature is one of the primary sources of new research

questions, this process can be thought of as a cycle. New research leads to new

questions and new hypotheses, which lead to new research, and so on.

This model also indicates that research questions can originate outside of this cycle

either with informal observations or with practical problems that need to be solved.

But even in these cases, the researcher begins by checking the research literature to

see if the question has already been answered and to refine it based on what previous

research has already found.

Learn By Doing

All scientists use the scientific method, which is a set of basic processes

performed in the same order for conducting research. Using the following

diagram of the scientific method, label each of the research steps in the

correct order that scientists use to conduct scientific studies.

Learn By Doing

Now imagine that you are a research psychologist and you want to conduct a

study to find out if there are any negative effects of talking on a cell phone

while driving a car. What would you do first to begin your study? How would you

know if your study might provide any new information? How would you go about

conducting your study? What would you do after you have completed your study?

These are questions that every researcher must answer in order to properly

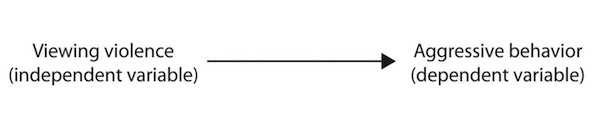

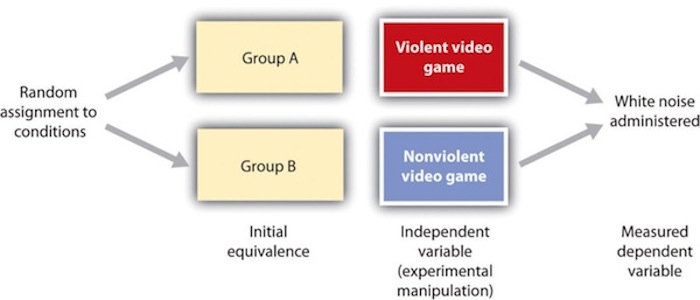

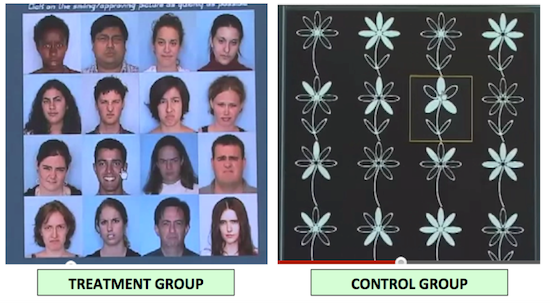

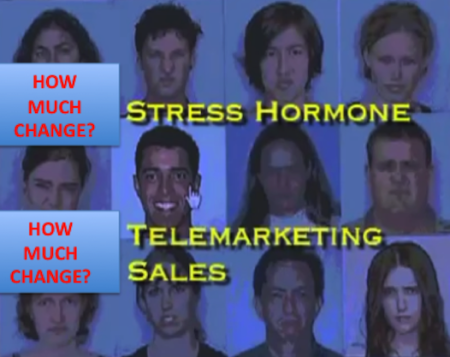

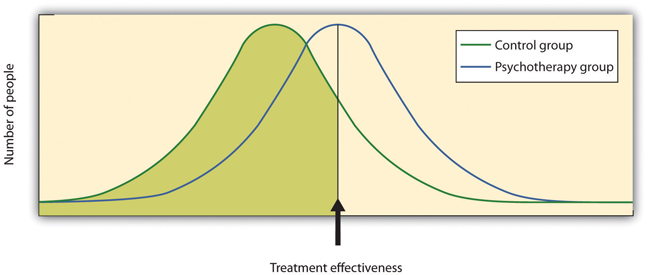

conduct a scientific study. As the researcher for your study on cell phone